A Benchmark for Modeling Violation-of-Expectation in Physical Reasoning Across Event Categories

Abstract

Recent work in computer vision and cognitive reasoning has given rise to an increasing adoption of the Violation-of-Expectation (VoE) paradigm in synthetic datasets. Inspired by infant psychology, researchers are now evaluating a model’s ability to label scenes as either expected or surprising with knowledge of only expected scenes. However, existing VoE-based 3D datasets in physical reasoning provide mainly vision data with little to no heuristics or inductive biases. Cognitive models of physical reasoning reveal infants create high-level abstract representations of objects and interactions. Capitalizing on this knowledge, we established a benchmark to study physical reasoning by curating a novel large-scale synthetic 3D VoE dataset armed with ground-truth heuristic labels of causally relevant features and rules. To validate our dataset in five event categories of physical reasoning, we benchmarked and analyzed human performance. We also proposed the Object File Physical Reasoning Network (OFPR-Net) which exploits the dataset’s novel heuristics to outperform our baseline and ablation models. The OFPR-Net is also flexible in learning an alternate physical reality, showcasing its ability to learn universal causal relationships in physical reasoning to create systems with better interpretability.

VoE Dataset

Download the dataset used for experimentation, along with the annotations from here

Dataset Structure

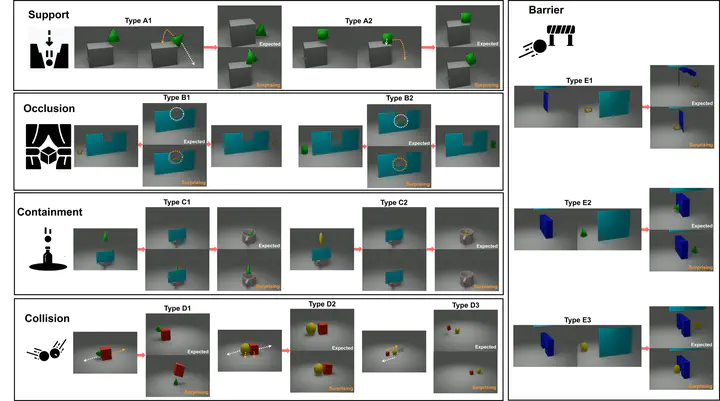

The dataset consists of five violations of expectation event categories: A. Support B. Occulsion C. Containment D. Collision E. Barrier

There are 500 trials in each event category (75% Train, 15% Val, 10% Test). The train folder contains only expected videos while the validation and test folders contain both expected and surprising videos. Surprising and expected videos with the same stimuli have the same trial ID.

Each trial folder has an rgb.avi which is the RGB video. The folder also has a physical_data.json which contains the ground truth values of features, prior rules and posterior rules. There is also a scene_data.json which has frame-by-frame values of the position and rotation of all entities (object, occluder, barrier, support, container) along with the instance IDs of all entities.

Download utils.py to generate frames and videos of the depth and instance segmented images from depth_raw.npz and inst_raw.npz located in every trial folder. Do edit the settings section of utils.py to your needs. If you need the complete version of the dataset (instead of what was used for experimentation), you may refer to the code below to generate via Blender

Dataset Generation System Requirements

- Blender 2.83 with Eevee engine

- Python 3.6

Code for Dataset Generation & Model Experimentation

The code repository can be found here

Dataset Generation

The dataset must be generated in blender’s python API for version 2.93.1. The generation uses the bpycv library for generation. The python environment requirements can be found in dataset_generation/requirements.txt. The variations are fixed for this dataset and are specified in the CSV files.

Models

To train the model with the data, set up the models/setup.yaml file and run python models/main.py using the environment from models/requirements.txt. Note that resnet3d_direct refers to the baseline model. You must set the ABSOLUTE_DATA_PATH to the absolute path to the data. An instance of the config can be seen as such:

###############################################

# training and testing related configurations #

###############################################

TRAIN: true

TEST: true

TRAINING:

# options: "A", "B", "C", "D", "E"

EVENT_CATEGORY: "A"

# options: "resnet3d_direct", "random", "OF_PR", "Ablation"

MODEL_TYPE: "random"

# path to save experiment data *relative to main.py*

RELATIVE_SAVE_PATH: "experiments/"

# path to save dataset objects for quick loading and retrieval *relative to main.py*

RELATIVE_DATASET_PATH: "datasets/"

# path to AVoE Dataset (with the 5 folders for each event category)

ABSOLUTE_DATA_PATH: ''

# path to state_dict of model to load (best_model.pth) *relative to main.py*

RELATIVE_LOAD_MODEL_PATH: null

# type of decision tree. Options: "normal", "direct", "combined"

DECISION_TREE_TYPE: "combined"

# to use oracle model for observed outcome

SEMI_ORACLE: true

# to use gpu or not to

USE_GPU: true

# learning rate for training

LEARNING_RATE: 0.001

# number of epochs

NUM_EPOCHS: 10

# batch size for training

BATCH_SIZE: 16

# to use pretrained wights for transfer learning

USE_PRETRAINED: true

# whether to freeze pretrained weights

FREEZE_PRETRAINED_WEIGHTS: true

# set the random seed

RANDOM_SEED: 1

# optimizer type. Options: ['adam', 'sgd']

OPTIMIZER_TYPE: "adam"

# dataset efficiency (cpu) Options: ['time', 'memory'] time loads entire dataset into CPU

DATASET_EFFICIENCY: 'time'

TESTING:

# options: "A", "B", "C", "D", "E", "combined"

EXPERIMENT_ID: null

# path to save experiment data *relative to main.py*

RELATIVE_SAVE_PATH: "experiments/"

# path to save dataset objects for quick loading and retrieval *relative to main.py*

RELATIVE_DATASET_PATH: "datasets/"

# path to AVoE Dataset (with the 5 folders for each event category)

ABSOLUTE_DATA_PATH: ''

# type of decision tree. Options: "normal", "direct", "combined"

DECISION_TREE_TYPE: "combined"

# to use oracle model for observed outcome

SEMI_ORACLE: true

# to use gpu or not to

USE_GPU: true

# dataset efficiency (cpu) Options: ['time', 'memory'] time loads entire dataset into CPU

DATASET_EFFICIENCY: 'time'

Acknowledgements

This research is supported by the Agency for Science, Technology and Research (A*STAR), Singapore under its AME Programmatic Funding Scheme (Award #A18A2b0046). We would also like to thank the National University of Singapore for the computational resources used in this work.